Autonomous Exploration for Multi-Agent Systems

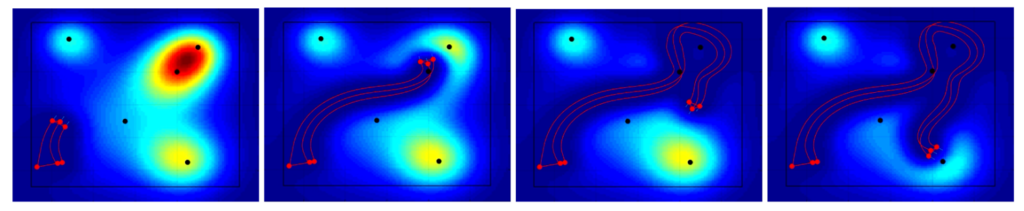

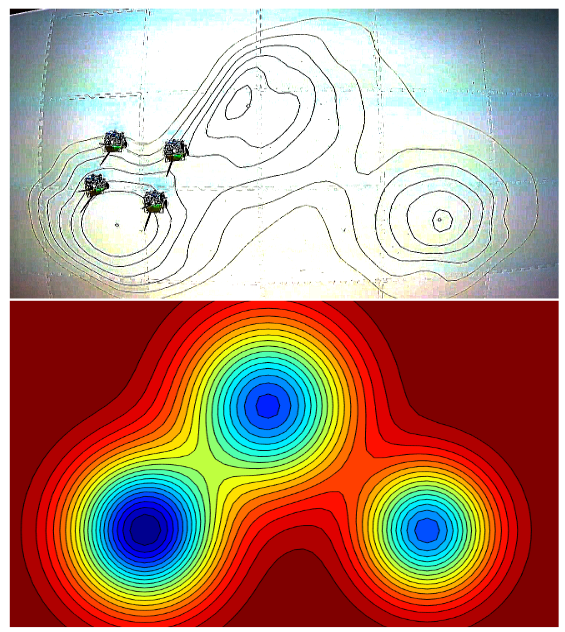

Scalar fields describe how an environmental quantity of interest varies over space. For example, oil spill concentrations or occupancy probabilities can be described as scalar functions that map a multi-dimensional position to a single value. Such a map helps a human operator decide which actions to take next. We propose a multi-agent exploration strategy that constructs an approximation of an arbitrary scalar field using Gaussian processes (GPs). In our approach, agents follow the gradient of the GP’s estimation error, which drives them toward local maxima of the error. As a result, agents move toward areas where the estimated GP differs most from the true scalar field, which can dramatically improve the GP’s prediction performance.
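The gradient-following idea above can be illustrated with a minimal single-agent sketch. It makes several assumptions that are not taken from the paper: the GP posterior variance stands in for the estimation error (the true error is unknown without the true field), the kernel is a squared-exponential with unit prior variance, and the gradient is taken by finite differences. All function names (`rbf`, `posterior_variance`, `explore_step`) are hypothetical.

```python
import numpy as np

def rbf(a, b, length=0.5):
    """Squared-exponential kernel between point sets a (n, d) and b (m, d)."""
    d2 = ((a[:, None, :] - b[None, :, :]) ** 2).sum(axis=-1)
    return np.exp(-0.5 * d2 / length**2)

def posterior_variance(x, X, noise=1e-4, length=0.5):
    """GP posterior variance at query point x, given sampled locations X.

    Assumption: this variance is used as a proxy for the estimation error.
    """
    x = np.asarray(x, dtype=float)
    K = rbf(X, X, length) + noise * np.eye(len(X))
    k = rbf(x[None, :], X, length)[0]
    return 1.0 - k @ np.linalg.solve(K, k)

def variance_gradient(x, X, eps=1e-4, **kw):
    """Central finite-difference gradient of the posterior variance at x."""
    x = np.asarray(x, dtype=float)
    grad = np.zeros(len(x))
    for i in range(len(x)):
        step = np.zeros(len(x))
        step[i] = eps
        hi = posterior_variance(x + step, X, **kw)
        lo = posterior_variance(x - step, X, **kw)
        grad[i] = (hi - lo) / (2.0 * eps)
    return grad

def explore_step(x, X, step_size=0.1, **kw):
    """Move one agent a fixed distance up the gradient of the error proxy."""
    x = np.asarray(x, dtype=float)
    grad = variance_gradient(x, X, **kw)
    norm = np.linalg.norm(grad)
    if norm < 1e-12:  # flat region: stay in place
        return x.copy()
    return x + step_size * grad / norm

# One sample has been taken at the origin; the agent moves away from it,
# toward a region the GP knows less about.
X = np.array([[0.0, 0.0]])
agent = np.array([0.2, 0.0])
new_agent = explore_step(agent, X)
```

In a multi-agent setting, each agent would take such a step from its own position and the shared sample set `X` would grow as measurements arrive; how agents coordinate is not covered by this sketch.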

- Lin, Tony X., et al. “A Distributed Scalar Field Mapping Strategy for Mobile Robots.” 2020 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS). IEEE, 2020.

Monocular Vision-based Localization and Pose Estimation with a Nudged Particle Filter and Ellipsoidal Confidence Tubes

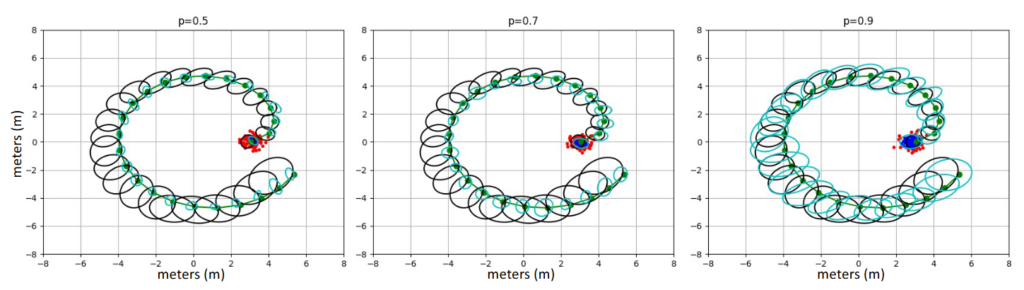

Monocular cameras are data-rich sensors that can be used for a wide variety of applications related to target acquisition, localization, and relative motion estimation. In particular, these sensors can provide significant information about the global pose of a camera by matching acquired images against a database of previously acquired and labeled images. We propose a filtering strategy that uses a particle filter to estimate the pose of a camera given a sequence of newly acquired images. Our approach uses two convolutional neural networks: one predicts the image associated with a given pose, and the other predicts the pose associated with a given image. Together, they allow our filter to estimate the posterior distribution over the space of camera poses (we take the mean as our best estimate) and make the filter robust to poor initialization.
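As a rough illustration of how the two networks could fit into a particle filter, the sketch below runs one filter update. It is not the nudging scheme of the cited paper. The callables `predict_obs` (pose to image) and `propose_pose` (image to pose) are hypothetical stand-ins for the two networks, the motion model is a plain random walk, the likelihood is Gaussian, and re-seeding a fraction of particles near the proposed pose is an assumed, simplified way to recover from poor initialization.

```python
import numpy as np

def particle_filter_step(particles, observation, predict_obs, propose_pose,
                         rng, motion_noise=0.05, obs_noise=0.2,
                         nudge_fraction=0.1):
    """One update of a bootstrap particle filter with a simple re-seeding step.

    particles: (n, d) array of pose hypotheses with uniform weights.
    Returns the resampled particles and the weighted-mean pose estimate.
    """
    n, d = particles.shape

    # Propagate with a random-walk motion model (assumption).
    particles = particles + rng.normal(0.0, motion_noise, size=(n, d))

    # Re-seed a small fraction of particles near the pose suggested by the
    # image-to-pose stand-in (assumption, not the paper's nudging step).
    k = int(nudge_fraction * n)
    if k > 0:
        idx = rng.choice(n, size=k, replace=False)
        guess = np.asarray(propose_pose(observation), dtype=float)
        particles[idx] = guess + rng.normal(0.0, motion_noise, size=(k, d))

    # Weight each particle by how well its predicted observation matches.
    err = np.array([np.sum((predict_obs(p) - observation) ** 2)
                    for p in particles])
    log_w = -0.5 * err / obs_noise**2
    log_w -= log_w.max()  # avoid underflow before exponentiating
    weights = np.exp(log_w)
    weights /= weights.sum()

    # The posterior mean serves as the pose estimate.
    estimate = weights @ particles

    # Multinomial resampling back to uniform weights.
    keep = rng.choice(n, size=n, p=weights)
    return particles[keep], estimate

# Toy usage: the "image" is the pose itself, so both stand-ins are identities.
rng = np.random.default_rng(0)
true_pose = np.array([1.0, 2.0])
particles = rng.normal(5.0, 0.5, size=(200, 2))  # deliberately poor start
for _ in range(5):
    particles, estimate = particle_filter_step(
        particles, true_pose, lambda p: p, lambda z: z, rng)
```

In the toy usage the filter starts far from the true pose, and the re-seeded particles are what allow the estimate to recover.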

To further improve position estimation along the trajectory, we also incorporate a smoothing strategy that refines the trajectory poses. At each iteration, our approach computes an ellipsoid that covers a fixed percentage p of the particles, and it fuses particles whenever a loop closure is detected (that is, when a previously seen landmark is seen again). The fusion is based on Dempster-Shafer theory: particles are combined according to Dempster’s rule of combination, with the computed ellipsoids serving as the mass allocation sets. The proposed strategy reduces the position estimation error of smoothed poses by 33%.
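The two building blocks named above can be sketched separately. The first function fits an ellipsoid that contains roughly a fraction p of a particle set by scaling the sample covariance to the p-quantile of the squared Mahalanobis distances; this construction is an assumption and may differ from the one used in the paper. The second function is the standard Dempster rule of combination on a finite frame of discernment, with focal sets written as `frozenset`s. How the paper maps ellipsoids to focal sets is not reproduced here, and all names are hypothetical.

```python
import numpy as np

def covering_ellipsoid(particles, p=0.9):
    """Ellipsoid {x : (x - c)^T S^{-1} (x - c) <= 1} holding about p of the particles."""
    center = particles.mean(axis=0)
    cov = np.cov(particles, rowvar=False)
    diff = particles - center
    # Squared Mahalanobis distance of every particle from the mean.
    d2 = np.einsum("ij,jk,ik->i", diff, np.linalg.inv(cov), diff)
    # Scale the covariance so that a fraction p of the distances fall inside.
    return center, cov * np.quantile(d2, p)

def inside(x, center, shape):
    """True if point x lies in the ellipsoid defined by (center, shape)."""
    diff = x - center
    return diff @ np.linalg.solve(shape, diff) <= 1.0

def dempster_combine(m1, m2):
    """Dempster's rule of combination for two mass functions.

    Each mass function maps frozenset focal elements to masses summing to 1.
    """
    combined, conflict = {}, 0.0
    for a, wa in m1.items():
        for b, wb in m2.items():
            common = a & b
            if common:
                combined[common] = combined.get(common, 0.0) + wa * wb
            else:
                conflict += wa * wb  # mass assigned to the empty set
    if conflict >= 1.0 - 1e-12:
        raise ValueError("sources are in total conflict")
    # Renormalize by the non-conflicting mass.
    return {s: w / (1.0 - conflict) for s, w in combined.items()}

# Usage: a 90% ellipsoid for a particle cloud, and fusion of two sources.
rng = np.random.default_rng(0)
cloud = rng.normal(size=(1000, 2))
center, shape = covering_ellipsoid(cloud, p=0.9)

A, B = frozenset({"a"}), frozenset({"b"})
AB = A | B
fused = dempster_combine({A: 0.6, AB: 0.4}, {B: 0.5, AB: 0.5})
```

In the fusion example, the conflicting mass is 0.6 × 0.5 = 0.3, so the remaining products are renormalized by 0.7, which gives masses of 3/7 for `A` and 2/7 each for `B` and `AB`.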

- Lin, Tony X., et al. “Monocular Vision-based Localization and Pose Estimation with a Nudged Particle Filter and Ellipsoidal Confidence Tubes.” To appear in 2022 Unmanned Systems Journal. World Scientific, 2022.